
* Variational Autoencoder

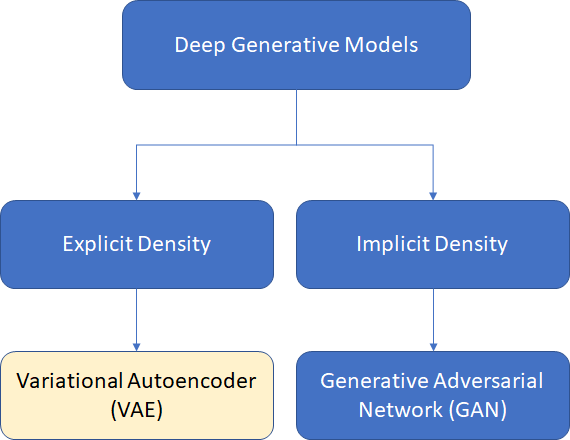

Work-in-progress notes on the VAE, one of the generative models (here it generates 1D signals instead of images).

```python
# coding: utf-8

# In[1]:

import os

import keras
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
```

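```python
# In[2]:

def sinosoidal(v):
    # one 100-sample signal: a sine whose amplitude grows as t**0.2;
    # v shifts both the phase and the vertical offset
    fix_wave = np.arange(100)
    return (fix_wave**0.2)*np.sin(0.3*fix_wave + v) + v
```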

```python
# In[3]:

# 1000 random offsets v ~ N(0, 0.5^2), one generated signal per offset
spectrum_y = np.random.normal(size=(1000, 1), scale=0.5)
spectrum_x = np.vstack([sinosoidal(i[0]) for i in spectrum_y])

# standardize with a single global mean and std
xmean, xstd = spectrum_x.mean(), spectrum_x.std()
ymean, ystd = spectrum_y.mean(), spectrum_y.std()
stdz_x = (spectrum_x - xmean)/xstd
stdz_y = (spectrum_y - ymean)/ystd

# shuffle, then split 70% / 10% / 20% into train / validation / test
total_size = len(stdz_x)
rand_idx = np.random.permutation(total_size)
x_train = stdz_x[rand_idx][:int(0.7*total_size)]
x_valid = stdz_x[rand_idx][int(0.7*total_size):int(0.8*total_size)]
x_test = stdz_x[rand_idx][int(0.8*total_size):]
```
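The decoder's outputs live in the same standardized units as `stdz_x`, so they must be mapped back with `xmean` and `xstd` to compare them with the original signals. Below is a minimal NumPy sketch of that inverse together with a check of the 70/10/20 split. It also vectorizes the `sinosoidal` loop by broadcasting. `split_indices` and `destandardize` are hypothetical helper names, not part of the original notebook.

```python
import numpy as np

def split_indices(total_size, rng):
    """Shuffle indices and cut them 70% / 10% / 20%, like the cell above."""
    rand_idx = rng.permutation(total_size)
    a, b = int(0.7 * total_size), int(0.8 * total_size)
    return rand_idx[:a], rand_idx[a:b], rand_idx[b:]

def destandardize(stdz, mean, std):
    """Map globally standardized values back to the original signal scale."""
    return stdz * std + mean

rng = np.random.default_rng(0)
t = np.arange(100)
v = rng.normal(size=(1000, 1), scale=0.5)
# same formula as sinosoidal(v), vectorized by broadcasting (1000, 1) against (100,)
signals = (t**0.2) * np.sin(0.3 * t + v) + v

mean, std = signals.mean(), signals.std()
stdz = (signals - mean) / std

train_idx, valid_idx, test_idx = split_indices(len(stdz), rng)
print(signals.shape)                                  # (1000, 100)
print(len(train_idx), len(valid_idx), len(test_idx))  # 700 100 200
print(np.allclose(destandardize(stdz, mean, std), signals))  # True
```

With the notebook's variables, the same inverse would be `destandardize(x_decoded, xmean, xstd)`.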

```python
# In[4]:

# plot all 1000 standardized signals on one axis
for i in stdz_x:
    plt.plot(i)
plt.show()
```

```python
# In[ ]:

# note: the `from __future__` imports were dropped; they are a SyntaxError
# anywhere but the top of a file and are unnecessary on Python 3
from keras.layers import Lambda, Input, Dense
from keras.models import Model
from keras.losses import mse
from keras.utils import plot_model
from keras import backend as K

import numpy as np
import matplotlib.pyplot as plt
import os
```

```python
# reparameterization trick
# instead of sampling from Q(z|X), sample epsilon ~ N(0, I)
# z = z_mean + sqrt(var) * epsilon
def sampling(args):
    """Reparameterization trick by sampling from an isotropic unit Gaussian.

    # Arguments
        args (tensor): mean and log of variance of Q(z|X)

    # Returns
        z (tensor): sampled latent vector
    """
    z_mean, z_log_var = args
    batch = K.shape(z_mean)[0]
    dim = K.int_shape(z_mean)[1]
    # by default, random_normal has mean = 0 and std = 1.0
    epsilon = K.random_normal(shape=(batch, dim))
    return z_mean + K.exp(0.5 * z_log_var) * epsilon
```
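`sampling` relies on a simple fact. If ε ~ N(0, I), then μ + exp(0.5 · log σ²) · ε ~ N(μ, σ²). The randomness therefore sits in ε, and gradients can flow through μ and log σ². Below is a NumPy sketch (not the Keras layer itself) that checks this empirically. The `z_mean` and `z_log_var` values are made up for illustration.

```python
import numpy as np

def sample_z(z_mean, z_log_var, rng):
    """NumPy version of the reparameterization trick used in `sampling`."""
    epsilon = rng.standard_normal(z_mean.shape)  # epsilon ~ N(0, I)
    return z_mean + np.exp(0.5 * z_log_var) * epsilon

rng = np.random.default_rng(42)
n = 200_000
z_mean = np.tile([1.0, -2.0], (n, 1))             # mu for a 2D latent space
z_log_var = np.tile(np.log([0.25, 4.0]), (n, 1))  # sigma^2 = 0.25 and 4.0

z = sample_z(z_mean, z_log_var, rng)
print(z.mean(axis=0))  # close to [1, -2]
print(z.std(axis=0))   # close to [0.5, 2]
```

Predicting the log variance instead of σ itself keeps the Dense output unconstrained, and `exp` guarantees a positive standard deviation.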

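```python
def plot_results(models,
                 data,
                 batch_size=128,
                 model_name="vae_mnist"):
    """Plots labels in the 2D latent space and decoded 1D signals over a latent grid

    # Arguments
        models (tuple): encoder and decoder models
        data (tuple): test signals and their labels
        batch_size (int): prediction batch size
        model_name (string): which model is using this function
    """
    encoder, decoder = models
    x_test, y_test = data
    os.makedirs(model_name, exist_ok=True)

    filename = os.path.join(model_name, "vae_mean.png")
    # display a 2D plot of the labels in the latent space
    z_mean, _, _ = encoder.predict(x_test,
                                   batch_size=batch_size)
    plt.figure(figsize=(12, 10))
    plt.scatter(z_mean[:, 0], z_mean[:, 1], c=np.ravel(y_test))
    plt.colorbar()
    plt.xlabel("z[0]")
    plt.ylabel("z[1]")
    plt.savefig(filename)
    plt.show()

    # decode an n x n grid of latent points and draw one signal per panel
    # (adapted from the MNIST digit grid: the signals are 1D, so no reshape)
    filename = os.path.join(model_name, "signals_over_latent.png")
    n = 10
    grid_x = np.linspace(-4, 4, n)
    grid_y = np.linspace(-4, 4, n)[::-1]
    fig, axes = plt.subplots(n, n, figsize=(15, 15), sharex=True, sharey=True)
    for i, yi in enumerate(grid_y):
        for j, xi in enumerate(grid_x):
            z_sample = np.array([[xi, yi]])
            x_decoded = decoder.predict(z_sample)
            axes[i, j].plot(x_decoded[0])
    plt.savefig(filename)
    plt.show()
```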

```python
# synthetic 1D signal dataset: prints (1000, 100) (1000, 1)
print(spectrum_x.shape, spectrum_y.shape)
original_dim = spectrum_x.shape[1]

# network parameters
input_shape = (original_dim, )
intermediate_dim = 3
batch_size = 64
latent_dim = 2
epochs = 5000
```

```python
# VAE model = encoder + decoder
# build encoder model
inputs = Input(shape=input_shape, name='encoder_input')
x = Dense(intermediate_dim, activation='relu')(inputs)
z_mean = Dense(latent_dim, name='z_mean')(x)
z_log_var = Dense(latent_dim, name='z_log_var')(x)

# use reparameterization trick to push the sampling out as input
# note that "output_shape" isn't necessary with the TensorFlow backend
z = Lambda(sampling, output_shape=(latent_dim,), name='z')([z_mean, z_log_var])

# instantiate encoder model
encoder = Model(inputs, [z_mean, z_log_var, z], name='encoder')
encoder.summary()
plot_model(encoder, to_file='vae_mlp_encoder.png', show_shapes=True)
```

```python
# build decoder model
latent_inputs = Input(shape=(latent_dim,), name='z_sampling')
x = Dense(intermediate_dim, activation='relu')(latent_inputs)
# outputs = Dense(original_dim, activation='sigmoid')(x)
# linear output: the standardized signals are not bounded to [0, 1], so no sigmoid
outputs = Dense(original_dim)(x)

# instantiate decoder model
decoder = Model(latent_inputs, outputs, name='decoder')
decoder.summary()
plot_model(decoder, to_file='vae_mlp_decoder.png', show_shapes=True)
```

```python
# instantiate VAE model
outputs = decoder(encoder(inputs)[2])
vae = Model(inputs, outputs, name='vae_mlp')
```
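The forward pass below traces how shapes flow through `encoder`, `sampling` and `decoder`. It is a NumPy illustration with random weights standing in for the trained Dense layers and the same layer sizes (100 → 3 → 2 + 2 → 2 → 3 → 100). It is not the Keras model. The helpers `dense` and `vae_forward` and the weight initialization are assumptions made for the sketch.

```python
import numpy as np

original_dim, intermediate_dim, latent_dim = 100, 3, 2
rng = np.random.default_rng(0)

def dense(n_in, n_out):
    """Random (W, b) pair standing in for a trained keras Dense layer."""
    return rng.normal(scale=0.1, size=(n_in, n_out)), np.zeros(n_out)

def relu(a):
    return np.maximum(a, 0.0)

# encoder: shared hidden layer, then separate heads for z_mean and z_log_var
enc_h = dense(original_dim, intermediate_dim)
enc_mu = dense(intermediate_dim, latent_dim)
enc_lv = dense(intermediate_dim, latent_dim)
# decoder: hidden layer, then a linear output layer
dec_h = dense(latent_dim, intermediate_dim)
dec_out = dense(intermediate_dim, original_dim)

def vae_forward(x):
    h = relu(x @ enc_h[0] + enc_h[1])            # (batch, 3)
    z_mean = h @ enc_mu[0] + enc_mu[1]           # (batch, 2)
    z_log_var = h @ enc_lv[0] + enc_lv[1]        # (batch, 2)
    eps = rng.standard_normal(z_mean.shape)
    z = z_mean + np.exp(0.5 * z_log_var) * eps   # (batch, 2)
    h_dec = relu(z @ dec_h[0] + dec_h[1])        # (batch, 3)
    x_hat = h_dec @ dec_out[0] + dec_out[1]      # (batch, 100), no sigmoid
    return z_mean, z_log_var, z, x_hat

x_batch = rng.standard_normal((64, original_dim))
z_mean, z_log_var, z, x_hat = vae_forward(x_batch)
print(z_mean.shape, z.shape, x_hat.shape)  # (64, 2) (64, 2) (64, 100)
```

The 3-unit hidden layer is a very narrow bottleneck, which is workable here only because each signal is fully determined by one scalar offset `v`.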

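```python
models = (encoder, decoder)

# reconstruction term: mse averages over the 100 samples, so multiplying by
# original_dim turns it into the per-signal sum of squared errors
reconstruction_loss = mse(inputs, outputs)
reconstruction_loss *= original_dim

# closed-form KL(Q(z|X) || N(0, I)) for a diagonal Gaussian
kl_loss = 1 + z_log_var - K.square(z_mean) - K.exp(z_log_var)
kl_loss = K.sum(kl_loss, axis=-1)
kl_loss *= -0.5

vae_loss = K.mean(reconstruction_loss + kl_loss)
```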
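This loss adds a per-signal sum of squared errors to the closed-form KL term -0.5 * sum(1 + log σ² - μ² - σ²). The NumPy sketch below mirrors it. It checks that `mse * original_dim` equals the summed squared error, and it compares the closed-form KL with a Monte Carlo estimate of E_q[log q(z) - log p(z)]. The function names and test values are illustrative assumptions.

```python
import numpy as np

def vae_loss_np(x, x_hat, z_mean, z_log_var):
    """NumPy mirror of the Keras loss: mse * original_dim + closed-form KL, batch mean."""
    original_dim = x.shape[1]
    reconstruction = np.mean((x - x_hat) ** 2, axis=-1) * original_dim
    kl = -0.5 * np.sum(1 + z_log_var - z_mean ** 2 - np.exp(z_log_var), axis=-1)
    return np.mean(reconstruction + kl), reconstruction, kl

def kl_monte_carlo(mu, log_var, rng, n=400_000):
    """Estimate KL(N(mu, sigma^2) || N(0, I)) as E_q[log q(z) - log p(z)]."""
    sigma = np.exp(0.5 * log_var)
    z = mu + sigma * rng.standard_normal((n, mu.size))
    log_q = -0.5 * (((z - mu) / sigma) ** 2 + log_var + np.log(2 * np.pi))
    log_p = -0.5 * (z ** 2 + np.log(2 * np.pi))
    return np.mean(np.sum(log_q - log_p, axis=-1))

rng = np.random.default_rng(1)
x = rng.standard_normal((4, 100))
x_hat = x + 0.1 * rng.standard_normal((4, 100))
mu = np.array([[0.5, -1.0]])
log_var = np.array([[np.log(0.5), np.log(2.0)]])

loss, rec, kl = vae_loss_np(x, x_hat, np.repeat(mu, 4, axis=0), np.repeat(log_var, 4, axis=0))
print(np.allclose(rec, np.sum((x - x_hat) ** 2, axis=-1)))  # True
print(kl[0], kl_monte_carlo(mu[0], log_var[0], rng))  # closed form vs. estimate, roughly equal
```

Scaling by `original_dim` matters: without it the reconstruction term would be about 100 times smaller relative to the KL term, and the KL pull toward the prior would dominate.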

```python
vae.add_loss(vae_loss)
vae.compile(optimizer='adam')
vae.summary()
plot_model(vae, to_file='vae_mlp.png', show_shapes=True)

# train; validate on the held-out validation split (x_test stays unseen)
vae.fit(x_train, epochs=epochs, batch_size=batch_size, validation_data=(x_valid, None), verbose=1)
vae.save_weights('vae_mlp_mnist.h5')

# plot_results(models, data, batch_size=batch_size, model_name="vae_mlp")
```

```python
# In[26]:

# decode 1000 latent points drawn uniformly from [0, 3) x [0, 3)
x_decoded = decoder.predict(np.random.random((1000, 2))*3)

# In[27]:

for i in x_decoded:
    plt.plot(i)
plt.show()
```

Original spectra

Spectra generated by the VAE decoder
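The generation cell above feeds the decoder latent points drawn uniformly from [0, 3) in both dimensions, which covers only one quadrant of the latent space. The KL term pulls latent codes toward the N(0, I) prior, so the more usual way to generate new signals is to sample from that prior. A minimal sketch follows. It assumes `decode_fn` is any callable mapping an (n, 2) latent array to (n, 100) standardized signals, such as `decoder.predict`. The linear stand-in decoder is hypothetical and exists only so the snippet runs on its own.

```python
import numpy as np

def generate_from_prior(decode_fn, n_samples, latent_dim, mean, std, rng):
    """Sample z ~ N(0, I), decode it, and undo the global standardization."""
    z = rng.standard_normal((n_samples, latent_dim))
    stdz_signals = decode_fn(z)
    return stdz_signals * std + mean

# hypothetical stand-in for decoder.predict: a fixed random linear map 2 -> 100
rng = np.random.default_rng(0)
W = rng.normal(size=(2, 100))
fake_decode = lambda z: z @ W

signals = generate_from_prior(fake_decode, 1000, 2, mean=0.3, std=1.7, rng=rng)
print(signals.shape)  # (1000, 100)
```

With the trained notebook model, the call would be `generate_from_prior(decoder.predict, 1000, latent_dim, xmean, xstd, np.random.default_rng())`.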
